Teaching Race through Cultural Algorithms

Many people in computer science (CS) education are turning their attention to issues of racism and race. But many educators are also asking how computing can contribute to students’ racial literacy. Why not just leave racial literacy to social studies? Are there concepts in CS which can help students understand the mechanisms of oppression — not just racism through technology, but race as technology? And if so, how can we teach such alignments in responsible ways?

At the School of Intercultural Computing, we are exploring the intersection of racial literacy and CS to help students imagine futures less constricted by racialized thinking. One of our key concepts is the idea of cultural algorithms: not where algorithms embed culture, but where culture embeds algorithms. Here we reflect on an activity we tested with a small group of 7th graders as part of a broader unit on Race through Cultural Algorithms.

Birdcraft: Tinkering with the Technology of Caste

This activity was inspired by a fictional story in Isabel Wilkerson’s book Caste and adapts a procedure from Carlos A. Hoyt’s ‘technology of racialization.’

Teaching about race and racism often suffers from reification and myopia. Seeing race everywhere can cause us to reify it unwittingly, as in well-intentioned culture talk or studies of "race relations." To teach how race works as technology, it often helps to "make race strange": to present an intercultural or historical perspective that jars our usual sensibilities.

To help students get outside their heads, we designed a fictional bird world. Island A has birds with relatively short legs; Island B has birds with longer legs. One day, the birds of Island A got greedy and flew to Island B to get more worms. They imposed a caste system on the Island B birds. It defined a sorting procedure for deciding who is Short and who is Tall, a descent algorithm for sorting offspring, and the attributes and treatment of each "group."

Short, you're in: the best worms, the best nests, the front of the flock. Tall, wait your turn for worms, and get the worst sticks for nests. Tall legs are considered ugly and awkward; Short legs, elegant and pure. Mix Tall and Short, you get Medium; mix Medium and Tall, you still get Tall; and Medium birds are treated functionally the same as Talls. (If Short and Medium mix, we'll have to re-run the sorting algorithm to decide.)
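The descent rules above behave like a small lookup procedure with a fallback to the Sort step. Below is a minimal Python sketch of that reading. It assumes that legs of 3 inches or less sort as Short; this is our interpretation of the "sorting line" described in the activity setup, not a stated rule. It also assumes that same-category parents produce offspring of the same category. The names `sort_by_legs` and `descent` are hypothetical. Pairings the story never mentions, such as Medium + Medium, deliberately return `None`.

```python
from typing import Optional

SHORT, MEDIUM, TALL = "Short", "Medium", "Tall"

# Assumed cut-off: legs of 3 inches or less sort as Short.
SORTING_LINE_INCHES = 3.0


def sort_by_legs(leg_inches: float) -> str:
    """Sort step: can only ever return Short or Tall, never Medium."""
    return SHORT if leg_inches <= SORTING_LINE_INCHES else TALL


def descent(parent_a: str, parent_b: str, offspring_legs: float) -> Optional[str]:
    """Assign an offspring's category from its parents' categories.

    Returns None for pairings the story leaves undefined.
    """
    pair = frozenset((parent_a, parent_b))  # parent order does not matter
    if pair == {SHORT}:
        return SHORT  # assumed: Short + Short stays Short
    if pair == {TALL}:
        return TALL  # assumed: Tall + Tall stays Tall
    if pair == {SHORT, TALL}:
        return MEDIUM  # "Mix Tall and Short, you get Medium"
    if pair == {MEDIUM, TALL}:
        return TALL  # "Medium and Tall, still Tall"
    if pair == {SHORT, MEDIUM}:
        return sort_by_legs(offspring_legs)  # "re-run the sorting algorithm"
    return None  # e.g. Medium + Medium: a hole in the rules
```

For example, `descent("Short", "Medium", 4.0)` falls back to the Sort step and returns `"Tall"`. By contrast, `descent("Medium", "Medium", 4.0)` returns `None`, which exposes one of the holes in the rules.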

Our "bird caste system" is algorithmic in structure: it has five steps, each composed of lines of pseudocode (adapted from the work of Carlos Hoyt):

1. Select

2. Sort

3. Attribute

4. Essentialize

5. Act

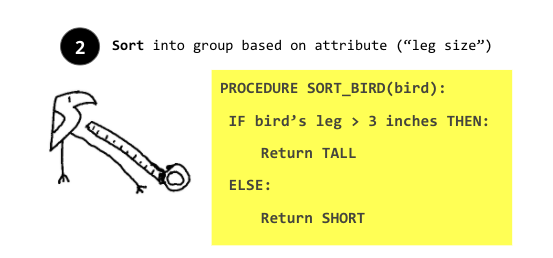
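To show how the five steps chain together, here is a hypothetical Python rendering. It is not the worksheet pseudocode itself. Several details are assumptions:

- the 3-inch cut-off;
- the Talls' place at the "back" of the flock, which the story does not state;
- all function and class names.

Step 4 is read here as freezing a bird's qualities so they can no longer be edited.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical rendering of the five-step bird caste algorithm.


@dataclass
class Bird:
    name: str
    leg_inches: float


@dataclass(frozen=True)
class Caste:
    """Frozen record: once essentialized, a bird's caste cannot be edited."""
    category: str
    qualities: tuple[str, ...]


def select_trait(bird: Bird) -> float:
    """Step 1 (Select): pick one physical trait, here leg length."""
    return bird.leg_inches


def sort_group(trait: float, line: float = 3.0) -> str:
    """Step 2 (Sort): only Short or Tall can come out; Medium needs ancestry."""
    return "Short" if trait <= line else "Tall"


def attribute(category: str) -> list[str]:
    """Step 3 (Attribute): attach value-laden qualities to each group."""
    return {"Short": ["elegant", "pure"], "Tall": ["ugly", "awkward"]}[category]


def essentialize(category: str, qualities: list[str]) -> Caste:
    """Step 4 (Essentialize): fix the qualities as if they were innate."""
    return Caste(category, tuple(qualities))


TREATMENT = {
    "Short": {"worms": "best", "nests": "best sticks", "flock": "front"},
    "Tall": {"worms": "wait your turn", "nests": "worst sticks", "flock": "back"},
}


def act(category: str) -> dict[str, str]:
    """Step 5 (Act): Medium birds get the same treatment as Talls."""
    return TREATMENT["Tall" if category == "Medium" else category]


def run(bird: Bird) -> tuple[Caste, dict[str, str]]:
    """Run Select -> Sort -> Attribute -> Essentialize -> Act for one bird."""
    category = sort_group(select_trait(bird))
    caste = essentialize(category, attribute(category))
    return caste, act(caste.category)


caste, treatment = run(Bird("Pip", 3.0))
print(caste.category, treatment["flock"])  # Short front
```

Writing each step as its own function makes it easy to see which step a student's rule change touches. Swapping `select_trait` changes the trait, while editing `TREATMENT` changes only the Act step.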

For instance, under Step 2: Sort we had the editable pseudocode:

The algorithms are meant to be full of holes and somewhat ridiculous, just like in real life. For example, the Sort procedure can never produce a Medium bird; that category is decided only by ancestry. As a second example, under Step 4: Essentialize we had the pseudocode:

Activity Setup

After explaining Bird World, we assigned each participant a bird identity: a category (Short, Medium, or Tall) and a leg size (2–8 inches). We gave some Short birds 3-inch legs (right on the sorting line) and some Tall birds 4-inch legs to approximate the messiness of real-world racial categories. Participants were placed into Zoom breakout rooms by category, and each group shared an editable Google Slide.

We then tasked the groups with making Bird World more "fair." They could change the worksheet and pseudocode any way they wanted. We wondered whether, and how, bird identities would play a role in their decisions.

What We Learned

The Shorts (the dominant group) decided to:

Keep the categories and sorting rules, but distribute treatment more "equally" (a.k.a. the "racial equality" route). Talls now got the best nests but last choice of worms; Shorts got the opposite; and Mediums flew at the front of the flock, but their nests and worms were just "okay." One bird with 3-inch legs argued against changing the rules because they were "just squeaking by" into the Short group.

Allow the offspring of Tall + Medium birds to be Medium, instead of Tall. Their justification? “It’s more fair if more birds can be Medium.”
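The Shorts' two changes can be written as edits to data rather than to the Sort procedure. Here is a minimal Python sketch under our own assumptions: the table and function names are hypothetical, `None` marks flock positions the group did not specify, and only the descent rules stated in the story are encoded.

```python
# Hypothetical encoding of the Shorts' revisions. Categories and the Sort
# step are unchanged; only the treatment table and one descent rule differ.
# None marks details the group did not specify.

SHORTS_TREATMENT = {
    "Short": {"worms": "first choice", "nests": "last choice", "flock": None},
    "Medium": {"worms": "okay", "nests": "okay", "flock": "front"},
    "Tall": {"worms": "last choice", "nests": "first choice", "flock": None},
}

# Only the descent rules stated in the story, keyed by unordered parent pair.
ORIGINAL_DESCENT = {
    frozenset({"Short", "Tall"}): "Medium",
    frozenset({"Medium", "Tall"}): "Tall",
}

# The Shorts' change: Tall + Medium offspring are now Medium instead of Tall.
SHORTS_DESCENT = {**ORIGINAL_DESCENT, frozenset({"Medium", "Tall"}): "Medium"}


def count_medium_outcomes(rules: dict) -> int:
    """Count parent pairings that produce a Medium offspring under a rule set."""
    return sum(1 for outcome in rules.values() if outcome == "Medium")


print(count_medium_outcomes(ORIGINAL_DESCENT), count_medium_outcomes(SHORTS_DESCENT))  # 1 2
```

Counting Medium outcomes makes the group's justification concrete. Under the stated rules, one pairing yields Medium; after the change, two do.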

The Mediums decided to:

Categorize by wing span instead of leg size. They changed the Sort algorithm to produce "Big" and "Small" categories, with the dividing line set at the average bird wing span they found on Google.

Label all birds as "friendly," but label Big birds as "assertive" and Small birds as "passive."

Add new categories, "Medium big" and "Medium small," for describing offspring. One member reasoned that more Medium categories might diffuse discrimination (echoing debates over multiracial identity in the 1990s).
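The Mediums' revised Sort step swaps the input trait but keeps the same shape: one threshold, two output groups. A hypothetical Python sketch follows. The source gives no figure for the average wing span, so it is a required parameter; any unit works as long as both values share it. A bird exactly at the average is assumed to sort as Small. All names are illustrative.

```python
from __future__ import annotations

# Hypothetical encoding of the Mediums' revisions.


def sort_by_wingspan(wingspan: float, average_wingspan: float) -> str:
    """Revised Sort step: compare wing span (not leg size) to an average."""
    return "Big" if wingspan > average_wingspan else "Small"


def labels(category: str) -> list[str]:
    """Every bird is 'friendly'; Big and Small each get one extra label."""
    extra = {"Big": "assertive", "Small": "passive"}.get(category)
    return ["friendly"] if extra is None else ["friendly", extra]


# Offspring categories the group added. They did not say how offspring
# are assigned to these categories, so no assignment rule is sketched.
NEW_MEDIUM_CATEGORIES = ("Medium big", "Medium small")
```

With made-up values, `sort_by_wingspan(30.0, average_wingspan=25.0)` returns `"Big"`, and `labels("Big")` returns `["friendly", "assertive"]`.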

The Talls decided to:

Erase caste categorization itself, arguing that the act of sorting was itself discriminatory.

Assign jobs according to physical attributes, assuming resources were limited. For instance, birds with larger wings would be flyers, birds with sharper beaks would collect food, and tall-legged birds would be "workout trainers." No specific sorting policy was defined for assigning these categories.

How It Can Add Value

Students unwittingly began playing out historical debates on race, racial identity, and anti-racist strategy. For instance, a Short-passing bird worried about losing their passing privileges; Medium birds focused on mixed-caste identities; and Tall birds questioned racial classification itself. Framed as Birdcraft, the idea that categorization can itself be discriminatory also seemed more obvious than it might have if we had talked about race directly. We also altered the world and setting: birds, not humans; height, not color; caste, not race. Because of this, U.S. students appeared to speak without worrying about transgressing norms of political correctness.

We are iterating on this activity to reflect on what we learned and on how to meet the needs of all students. In the future, we imagine Birdcraft sitting near the beginning of a longer unit on Race through Cultural Algorithms. The key is helping students transfer their critical thinking about Birdcraft to analogous real-world structures; we did not have time for this in our one-hour session. The goal would be for students to recognize that "it could be otherwise" and to see how racism creates race, not the other way around. Following this, we would lead into project-based activities that address "what we do" with this knowledge.

Conclusion

For many of us, it might be terrifying to imagine students remixing caste systems through algorithmic concepts. But we believe that tinkering with oppressive systems to understand how their hidden gears work is a key way CS education can help students understand systemic racism without naturalizing false notions of race. To be clear, this does not mean living in a colorblind world. Quite the opposite: it means acknowledging and understanding how we have been programmed to assign value to different groups of people, a programming driven by capitalism and exploitation. It means striving for home: a future that invalidates the racist paradigm as it is expressed in our laws, schools, culture, and daily life. Our activities might sound like the start of a piece of speculative fiction by the legendary Octavia Butler, and that's no accident. Innovative work around visionary fiction and social justice can provide us with the imaginative resources to realize better futures with and through computing concepts.

Interested in this work? We will begin holding quarterly testing sessions open to the public and can make our resources available upon request.